Hey again Krita animators!

For a number of months, @eoinoneill and I have been working on and off on a major rewrite of Krita’s animation systems, with the goal of improving how Krita handles animation-audio synchronization. The goal is broad: we want to improve not only the quality and consistency of animation audio in Krita, but also the workflow for the common tasks of animating to dialogue, music, and other kinds of sound.

If you’re interested in the details or want to follow the development of this feature more closely, you can check out the GitLab merge request here.

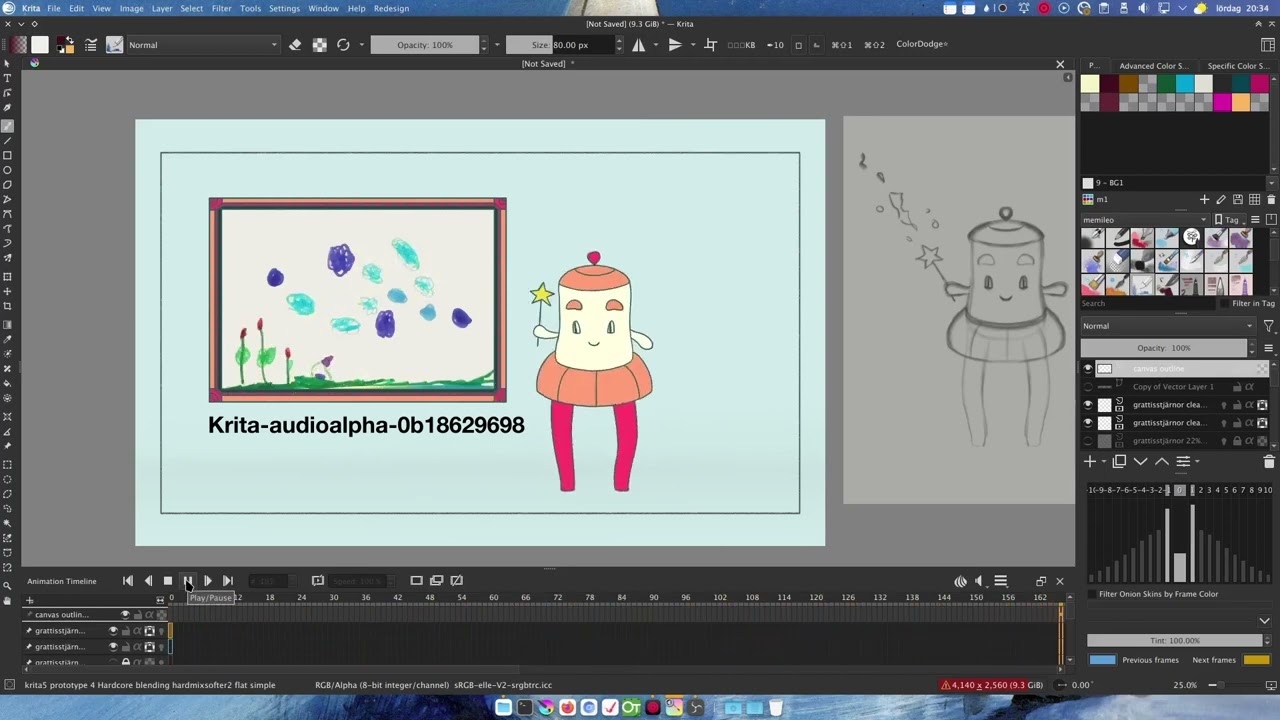

We aren’t quite there yet, and we’re now targeting v5.2 (instead of the upcoming v5.1), but we’ve decided to stop by and share an early preview with the Krita animator community here on KA, in the form of a Linux test AppImage that you can download and try out here:

Krita - Animation Audio Test AppImage #1 (Linux)

(Obviously this comes with the usual warnings and caveats about running unofficial development builds from unusual sources. Expect issues, bugs and crashes; don’t do any important work using unstable software; be careful that you’re running things from trusted sources; etc.)

(Also, sorry Windows and Mac users, this test build is only available as a Linux AppImage.)

Here’s what you should expect…

What should work solidly at this point & what is worth testing

- Animators should be able to load up a compatible audio file and animate with it as expected.

- Animation playback should work as animators expect, with consistent synchronization between image frames and sound across a variety of playback conditions.

- Scrubbing and clicking around on the timeline should produce short, easy-to-discern chunks of audio. Right now we play a 0.25-second window, which is longer than a single animation frame, because we think the extra context makes it easier to work with. As a result, you’ll hear some overlap between neighboring frames; this is by design for now and open to debate. (Perhaps this should be configurable? We’re not sure yet, so we’re looking forward to feedback on this point.)

- Rendering an animation to a file should work as expected in all cases. When rendering an animation with attached audio, the synchronization of audio and video should be perceptibly consistent with the playback within Krita itself. In other words, audio and video should synchronize as you’d expect in both the program and the rendered video file.

- Obviously everything should be relatively stable and functional. But this is still a development build, so it’s possible that bugs will be present. If you come across bugs, this merge request is the best place to report them for the time being.
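To put the 0.25-second scrub window mentioned above into perspective, here is a quick back-of-the-envelope calculation. It is only a sketch: the frame rates are example values, and `frames_covered` is a hypothetical helper, not anything from Krita’s code.

```python
# Rough arithmetic: how many animation frames a 0.25 s scrub window spans.
SCRUB_WINDOW_SECONDS = 0.25  # the window size mentioned in the post

def frames_covered(fps: float, window: float = SCRUB_WINDOW_SECONDS) -> float:
    """Hypothetical helper: return how many frame durations fit into the scrub window."""
    frame_duration = 1.0 / fps  # seconds per frame
    return window / frame_duration

# Example frame rates (assumed, not taken from any Krita setting).
for fps in (12, 24, 30):
    print(f"{fps} fps: one frame = {1000 / fps:.1f} ms, "
          f"window covers {frames_covered(fps):.2f} frames")
```

At 24 fps, for example, one frame lasts about 41.7 ms, so each scrub plays roughly six frames’ worth of audio, which is where the overlap between neighboring frames comes from.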

Known issues & things that are not yet where they need to be

- Playback speed adjustment is non-functional for now and has been disabled. It’s still on our to-do list, and I think we have a good idea of how to approach it using MLT, but we’re just not there yet.

- The “drop frames” button has also been disabled for now, and we’re not yet sure what to do about it. With audio synchronization, the images essentially have to follow the precise timing of the audio track. This is still an open question, but what matters most for now is that audio/video synchronization is stable.

- We’d like to implement an audio waveform visualization track in the timeline docker to ship alongside the audio update, as it’s a great aid for placing frames in time with music and dialogue, but we’re not there yet.
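To illustrate why a “drop frames” toggle becomes awkward once the images have to follow the audio track, here is a simplified model that assumes the audio position acts as the master clock during playback. This is a generic illustration under that assumption, not Krita’s or MLT’s actual implementation; `frame_for_audio_time` and `frames_shown` are hypothetical helpers.

```python
import math

def frame_for_audio_time(audio_seconds: float, fps: float) -> int:
    """Hypothetical helper: return the image frame that matches the audio clock."""
    # The small epsilon guards against floating-point error just below a frame boundary.
    return math.floor(audio_seconds * fps + 1e-9)

def frames_shown(fps: float, render_interval: float, duration: float) -> list[int]:
    """Simulate a display that can only draw a new image every `render_interval`
    seconds while the audio keeps playing at normal speed (assumed model)."""
    steps = round(duration / render_interval)
    # Each redraw asks the audio clock which frame should be visible right now.
    return [frame_for_audio_time(i * render_interval, fps) for i in range(steps)]

# A display keeping up with 24 fps shows every frame...
print(frames_shown(24, 1 / 24, 0.25))
# ...while one that only manages 10 redraws per second must skip frames to stay in sync.
print(frames_shown(24, 0.1, 0.5))
```

In this simplified model, the slow display shows frames [0, 2, 4, 7, 9]: because the sound never waits for the images, frames are skipped rather than the audio being slowed, so dropping frames is effectively forced rather than optional.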

Where do you come in?

If you’re interested in seeing what’s new with animation audio in Krita, just give it a try and let us know what you think!

We’re really looking for all kinds of feedback and opinions about how you feel the new audio system is working, whether it seems to be an improvement over the old one so far, how well you feel that it synchronizes video and audio both inside Krita and in rendered video files, how easy it is to work with, etc.

Just go ahead and drop any thoughts you have about audio synchronization and workflow here in this thread. The feedback we get here will help inform our next steps for animation audio in Krita, so don’t be shy! Hopefully we can get a conversation going that helps make Krita 5.2 a great release for animators.

P.S.: Eoin and I are going to be away for a little while for a summer break soon, so don’t worry if we go radio silent for a bit. We’ll be back!