Introduction

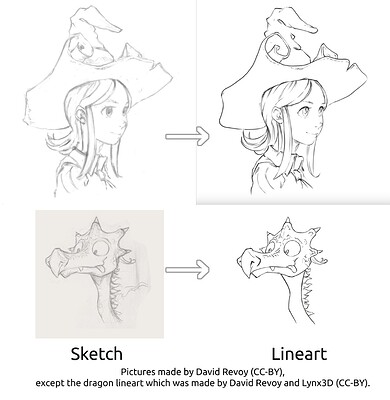

Over the next few months, we will be sharing our progress on a new experimental feature we’re working on; let’s call it “Fast Line Art”. It will be a tool that takes a sketch and generates basic line art, similar to how a Colorize Mask takes line art and generates flat colors, which are the base of the coloring process for many artists.

The project is sponsored by Intel. They’ve sponsored several features in the past, most notably multi-threaded (faster) brushes and HDR on Windows. Thanks Intel!

(Note that sponsored development and donations are two different things: in the case of sponsored development we sign a contract and develop a specific feature for a set amount of money, while with donations we have full freedom regarding how we spend the funds.)

This project makes use of AI, or more precisely, neural networks (implementing it using just classical algorithms would be quite challenging). The technology itself has a very wide range of applications, but it has recently become well known in the artistic community due to the popularity of generative AI tools like Stable Diffusion, Midjourney and DALL-E. We do understand the concerns artists have around AI and how it’s being trained and used, and because of that we plan to do things differently (for example, we are not going to use generative AI in this project and we don’t plan on using it in the foreseeable future). I have explained our approach in detail below, which will hopefully alleviate your concerns.

About the Feature

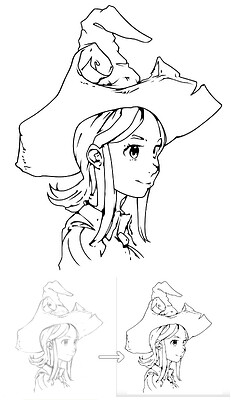

The purpose of the Fast Line Art feature is to speed up the process of getting the basic, simple line art done. It removes the need to tediously trace the sketch, repeating the same stroke multiple times to get the perfect one, and allows the artist to focus only on the parts that their art style depends on, like eyes in anime, cross-hatching in particular places, or multiple or thicker lines to indicate a shadow. You can make a sketch, sculpting the sketch lines with multiple strokes and an eraser to get a perfect shape in a messy manner, and the feature will translate it into the same perfect shape but in one continuous stroke.

This is an approximate “artistic rendition” of the feature:

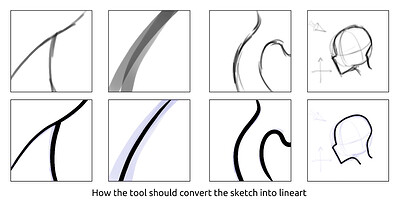

This is a close-up view, showing more precisely what it’s supposed to do (merge lines together into one pretty line, discard very faint lines):

The most common brush for line art is a solid brush with variable size. But many artists use other brushes, for example ones that look like a pencil, a ballpoint pen, or a Sumi ink brush. This tool should be able to handle the basic brush, but I’m not sure yet whether it will be universal or only support a limited set of brushes.

The project is still in its early stages, so I can’t say for sure how useful the results will be. I will be writing updates on Krita Artists (KA) along the way, so you’ll learn more about what to expect from the final version of the feature.

The Role of AI in This Project

This feature will use convolutional neural networks, which, yes, is roughly the same technology that generative AI uses. However, there are important differences between our project and generative AI. The most visible difference is that it isn’t designed to add or generate any details or to beautify your artwork; it will closely follow the provided sketch.

Moreover, there are several issues with the current use of (especially generative) AI that are not fully resolved yet, but I sincerely believe that this particular project is not repeating them. I will try my best to address all the typical concerns with AI, but if I have missed any, please let me know in the comments, and I’ll try to answer as best I can.

- It’s not a generative AI. It won’t invent anything. It won’t add details, cross-hatching, or any stylistic flourish besides basic line weight. It won’t fix any mistakes. It will closely follow the provided sketch. I believe it won’t even be possible for a network of this size and architecture to “borrow” any parts of the images from the training dataset.

- We will not be training the model on any of the existing datasets, or on stolen pictures. All artworks will come from artists who are fully aware of what they are going to be used for. And I believe our particular model will work better with special training data anyway. Maybe you’d want to help out with gathering the artworks; I will be making another post about that soon.

- The calculations will be 100% local and offline. It won’t send the sketch image to any server to process and return the line art. I’m not planning to implement any networking functionality, and there are no servers planned either. It will only use your own computer’s CPU and GPU for calculations, the same way all of the other features of Krita do. It also won’t train on the images you make in Krita, and it won’t save them anywhere unless you save them with Krita to your own device as usual, in a Krita file.

- I believe that it cannot replace artists (which is a common concern with generative AI) because you will still need to add details not included in the sketch, and your own special features (cross-hatching for example, double lines, thicker lines on the shadowy side), or you might not be able to use the tool at all if your inking brush or line art style is more unusual. The purpose of this feature is strictly to reduce the tedious, uncreative part of the process of making line art.

- It will consist of only convolutional layers, meaning that each output pixel will only see a few, or a few dozen, pixels in each direction. There will be no fully connected layer of nodes, so it won’t even be capable of making a whole composition, since it will work only locally. The advantage of using a fully convolutional neural network is that it will work for a picture of any size. From my understanding, the networks used for generative AI have a very different architecture (they use convolutional layers too, but also many other elements like dense blocks or more complex architectures, which this project won’t use).

- The model used will be relatively small (because we’re preparing for big canvases, and both RAM and GPU memory are limited on real systems). This means it will be faster, cheaper to make, and will require less data to train, but it will also not be as sophisticated as you might expect from an AI. For example, the original model in the article we’re basing our work on has 44 million parameters, and that will, I think, already be too big for us; on the other hand, Stable Diffusion 1.5 was released with 860 million parameters, and Stable Diffusion 3 is expected to have 8 billion. (The number of parameters of course doesn’t tell the whole story.)

- It should be predictable for the artists (if I manage to reach the results I want). It should do exactly what it’s supposed to do and nothing more.

- It doesn’t advance the technology. The article on which we’re basing the work was published back in 2016; and while some parts of the project are kind of experimental (how to include the details of the brush and the brush size without a so-called dense layer?), there is nothing all that unique or innovative about our approach. (In fact, I got the impression that the authors of that article aimed for maximum simplicity, exemplified by a very homogeneous design of the network and the choice of an optimizer that doesn’t need any manual configuration or fine-tuning. That’s consistent with our goals at Krita as well.)
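
For the more technically inclined: the point above about the network only seeing a limited neighbourhood of pixels can be made a bit more concrete. Below is a minimal sketch in Python that estimates how many input pixels a single output pixel can "see" (its receptive field) in a stack of convolutional layers. The function name and the layer lists are made up for illustration; they are not the architecture of this project or of the article it is based on. The sketch also assumes plain convolutions without dilation and ignores upsampling layers.

```python
from typing import Iterable, Tuple


def receptive_field(layers: Iterable[Tuple[int, int]]) -> int:
    """Return the receptive field width, in input pixels, of a conv stack.

    Each layer is a (kernel_size, stride) pair. Dilation is assumed to be 1.
    """
    field = 1  # with no layers, one output pixel sees exactly one input pixel
    jump = 1   # distance, in input pixels, between neighbouring outputs
    for kernel_size, stride in layers:
        # Each layer widens the view by (kernel_size - 1) steps of size `jump`.
        field += (kernel_size - 1) * jump
        # Strided layers make every later step cover more input pixels.
        jump *= stride
    return field


# Hypothetical layer stacks, NOT the real network of this project.
three_small_layers = [(3, 1), (3, 1), (3, 1)]
with_downsampling = [(5, 2), (3, 2), (3, 1), (3, 1)]

print(receptive_field(three_small_layers))  # 7
print(receptive_field(with_downsampling))   # 25
```

However the layers are arranged, the result is a fixed window that doesn't depend on the canvas size, which is why a network built only from such layers works locally and can be applied to a picture of any size.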

More Information

The article the project is based on: https://esslab.jp/~ess/publications/SimoSerraSIGGRAPH2016.pdf

And this is a document I wrote about the project. Keep in mind that it was written for Intel, so with developers in mind, but at least some sections might be interesting for artists as well. https://files.kde.org/krita/project/SmartLineartProject.pdf

I will be writing more posts and updates throughout the duration of the project.

Short Summary

We’re going to make a feature called Fast Line Art that will use neural networks. The project is sponsored by Intel. It won’t be generative AI; it will just translate a sketch into line art, without adding new details, and it will work very similarly to existing filters, without sending anything to any server or doing anything online.

Ending Notes

In the comments, please try to keep the discussion civil and concise (sometimes threads here get very long, making it difficult for many people to read through them and/or join the conversation). But please do share any concerns or ask any questions you might have, and I’ll try to address them.